BMe Research Grant

Kálmán Kandó Doctoral School of Transportation and Vehicle Engineering

Department of Material Handling and Logistics Systems

Supervisor: Dr. Szirányi Tamás

LIDAR Based Vision of Autonomous Machines in Extreme Cases

Introducing the research area

Autonomous vehicles and mobile machines are currently undergoing significant development, both on public roads and in industrial environments. These machines are very often equipped with LIDAR sensors for environment sensing. The primary task of these sensors is free-space and object detection, but state-of-the-art research has shown that the point clouds they generate are also suitable for extracting higher-level semantic information [1]. Their application is limited, however: occlusion, the distance of objects, the resolution of the sensors, and similar factors strongly restrict the scope of recognition algorithms. I propose solutions for several of these limiting cases, thereby extending the usable range of the sensors.

Brief introduction of the research place

The research was carried out at the Vehicle Vision Laboratory of the Department of Material Handling and Logistics Systems of BME-KJK and at the Machine Perception Research Laboratory of MTA SZTAKI. The extensive profile of the Department ranges from logistic processes to the design and automation of construction and material handling machinery. The Department's Vehicle Vision Laboratory primarily carries out research and development in the field of environment perception for mobile machines.

History and context of the research

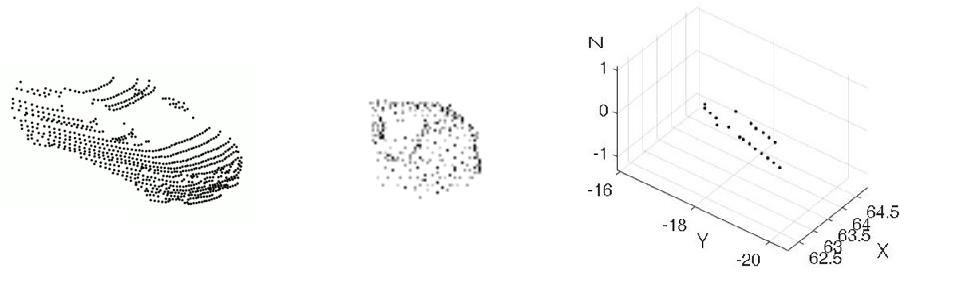

The 2.5D point clouds produced by LIDAR sensors have certain characteristics that follow from the sensors' working principle. In the measured point cloud, objects are made up of planar or approximately planar sections in the vertical direction. This property can be used for classification when objects are close and densely sampled [2]. However, we should not ignore cases in which an object is only partially visible, or is visible only in a few distinct segments. The decision should also be made as quickly as possible because the machines are moving. Fig. 1 shows the point cloud types for which I proposed classification solutions. Instead of the earlier approaches based on the complete shape, the classification methods I used are based on local properties.

Fig. 1 Car in different LIDAR measurements, from left to right: Velodyne HDL-64E measurement from the Sydney Urban Objects dataset [3], partial point cloud, and car from two LIDAR segments (my work deals with the last two point cloud types).

The research goals, open questions

The goal of the research was to develop methods that can solve the problem of partial point cloud recognition. In 2D image processing, methods of this type were already available (in many cases, they were the basis of the methods I developed in 3D). However, under certain visual conditions we cannot rely on cameras, so we need to use the available sensors as efficiently as possible. One of the questions to be answered was whether it is possible to identify objects that are only partially present in the recording, or that might not fulfill the sampling criterion in the vertical direction. In addition, more practical questions arose, such as what information, if any, could fill the gaps in the available data, and what methods might be appropriate to solve the problem.

Methods

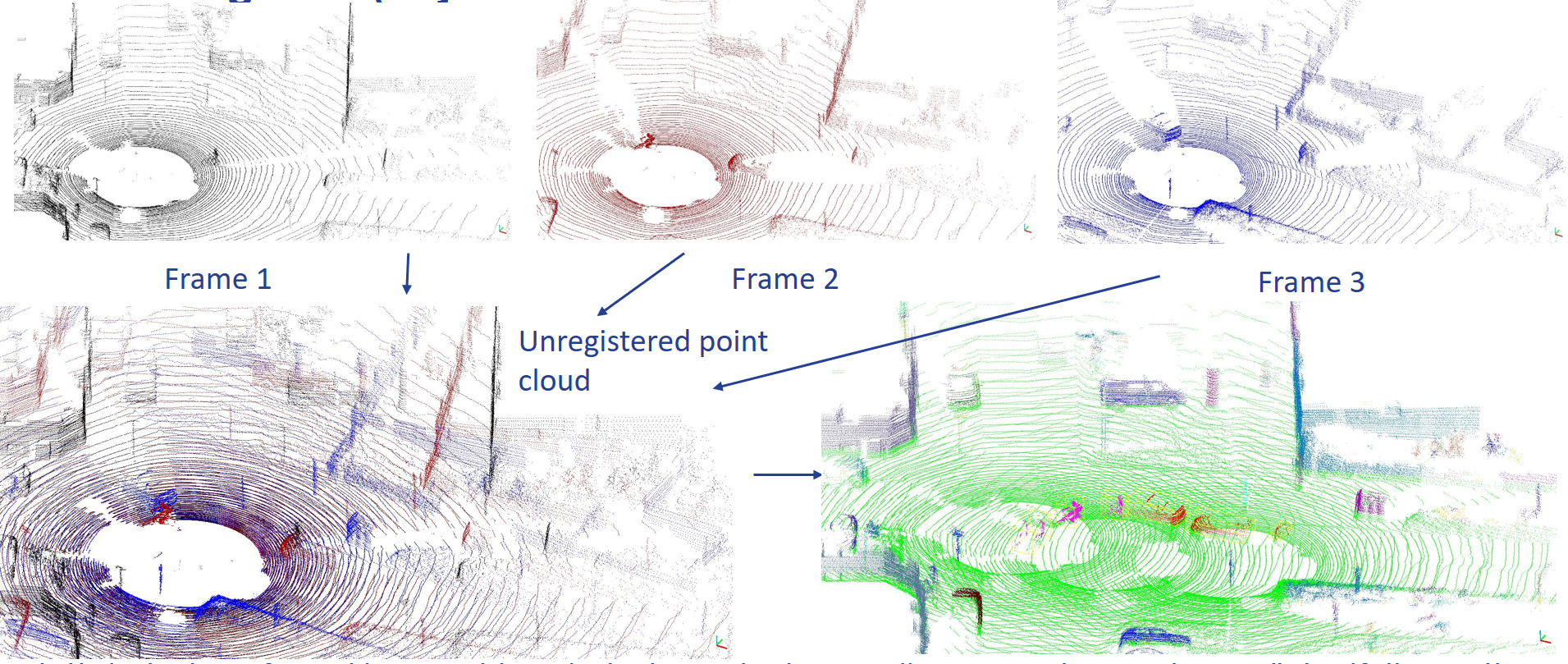

First, I built on the basic methods of point cloud processing. The most important preprocessing methods I used were ICP (Iterative Closest Point) [4] for registering point clouds, MSAC (M-estimator SAmple Consensus) [5] for ground detection, Euclidean clustering [6] for object segmentation, M3C2 (Multiscale Model to Model Cloud Comparison) [7] for change detection, and the Hungarian algorithm [8] for object tracking (Fig. 2). In addition, I repeatedly used and extended 2D image processing methods, such as the Harris keypoint detector [9], the Fourier descriptor for shape description [10], and Bag of Features based classification [11].
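To make the ground detection step more concrete, the following is a minimal sketch of MSAC-style plane fitting in pure Python. It is an illustration under stated assumptions, not the implementation used in the research: the function names, the `threshold` and `iterations` values, and the fixed random seed are all hypothetical choices. The only MSAC-specific part is the cost, which sums squared residuals for inliers and a constant penalty for outliers instead of merely counting inliers as plain RANSAC does.

```python
import random


def plane_from_points(p, q, r):
    """Plane (a, b, c, d) with unit normal through three points, or None if collinear."""
    ux, uy, uz = q[0] - p[0], q[1] - p[1], q[2] - p[2]
    vx, vy, vz = r[0] - p[0], r[1] - p[1], r[2] - p[2]
    # The normal is the cross product of the two edge vectors.
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = (nx * nx + ny * ny + nz * nz) ** 0.5
    if norm < 1e-12:
        return None
    nx, ny, nz = nx / norm, ny / norm, nz / norm
    return nx, ny, nz, -(nx * p[0] + ny * p[1] + nz * p[2])


def msac_plane(points, threshold=0.1, iterations=200, seed=0):
    """Fit a plane to (x, y, z) tuples; return (plane, inlier_indices).

    threshold, iterations and seed are illustrative values (assumptions).
    """
    rng = random.Random(seed)
    best_plane, best_cost = None, float("inf")
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue  # degenerate (collinear) sample
        a, b, c, d = plane
        # MSAC cost: squared distance for inliers, threshold^2 for outliers.
        cost = sum(min((a * x + b * y + c * z + d) ** 2, threshold ** 2)
                   for x, y, z in points)
        if cost < best_cost:
            best_plane, best_cost = plane, cost
    if best_plane is None:
        raise ValueError("no non-degenerate sample found")
    a, b, c, d = best_plane
    inliers = [i for i, (x, y, z) in enumerate(points)
               if abs(a * x + b * y + c * z + d) <= threshold]
    return best_plane, inliers
```

Points returned as inliers would be treated as ground; the remaining points are the candidates for object segmentation.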

Fig. 2 Results of point cloud preprocessing. The top row shows three consecutive LIDAR frames (indicated with different colors); the bottom left figure shows them on top of each other without registration. The last figure is the final result: the point clouds and objects have been registered into one global coordinate frame, the ground has been detected (green), and moving objects have been tracked (the same object is indicated with the same color).
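The object segmentation step listed among the preprocessing methods can be illustrated with a short sketch of Euclidean clustering. This is a simplified, brute-force version written for readability: the function name, the `radius` and `min_size` parameters, and their default values are assumptions, and practical implementations typically use a k-d tree for the neighbour search instead of the O(n²) scan shown here.

```python
from collections import deque


def euclidean_clusters(points, radius=0.5, min_size=1):
    """Group (x, y, z) points that are connected by chains of neighbours within `radius`.

    Returns a sorted list of clusters, each a sorted list of point indices.
    """
    r2 = radius * radius
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue, cluster = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            xi, yi, zi = points[i]
            # Brute-force neighbour search among points not yet assigned.
            near = [j for j in unvisited
                    if (points[j][0] - xi) ** 2
                    + (points[j][1] - yi) ** 2
                    + (points[j][2] - zi) ** 2 <= r2]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                cluster.append(j)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return sorted(clusters)
```

Applied to the non-ground points of a frame, each returned cluster is one object candidate. Note that two points farther apart than `radius` still end up in the same cluster if a chain of intermediate points connects them.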

Results

The main results of the research include two methods offering a solution to the classification of LIDAR point clouds in extreme cases.

In the first case, only partial objects are visible, but the point cloud is assumed to be dense enough in the vertical direction. This kind of point cloud can be acquired, for example, by registering consecutive frames. The tests show that with this method a small part of the object (about 20 % of the whole) can yield a much more accurate prediction about the object than the available methods. The results can also be refined continuously as more of the object is explored. After keypoint detection and clustering, semi-local patterns can be defined, and the frequency of these patterns can be used to classify the partial objects.
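The idea of classifying by pattern frequency can be sketched with a generic Bag of Features pipeline. The code below is only an illustration of that general technique, not the descriptor or classifier of [S1] to [S3]: the codebook of pattern prototypes, the per-class reference histograms, and the histogram-intersection similarity are all assumptions introduced for the example.

```python
def nearest_word(descriptor, codebook):
    """Index of the codebook entry closest to the descriptor (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda k: sum((d - w) ** 2 for d, w in zip(descriptor, codebook[k])))


def pattern_histogram(descriptors, codebook):
    """Normalized frequency of each codebook pattern among the local descriptors."""
    counts = [0] * len(codebook)
    for desc in descriptors:
        counts[nearest_word(desc, codebook)] += 1
    total = sum(counts) or 1  # avoid division by zero for an empty input
    return [c / total for c in counts]


def classify(histogram, class_histograms):
    """Return the class whose reference histogram overlaps most with `histogram`."""
    def intersection(ref):
        return sum(min(h, r) for h, r in zip(histogram, ref))
    return max(class_histograms, key=lambda name: intersection(class_histograms[name]))
```

Because the histogram is built from whatever local patterns have been observed so far, it can be recomputed each time a new part of the object becomes visible, which matches the continuous refinement described above.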

Fig. 3 illustrates a possible configuration for registering consecutive frames and a resulting point cloud. Fig. 4 shows the registration at work (first the actual perception of the environment, then its registration in a global coordinate frame), together with the predicted class of the object [S1] [S2] [S3].

Fig. 3 Human shape registered from planar LIDAR data during movement (Source: own measurement) and a possible sensor configuration to acquire this (Source: SICK - Efficient solutions for material transport vehicles in factory and logistics automation)

Fig. 4 Data registration and obstacle (cyclist) prediction (the object is classified in 5 steps; blue indicates that the current prediction is the cyclist category)

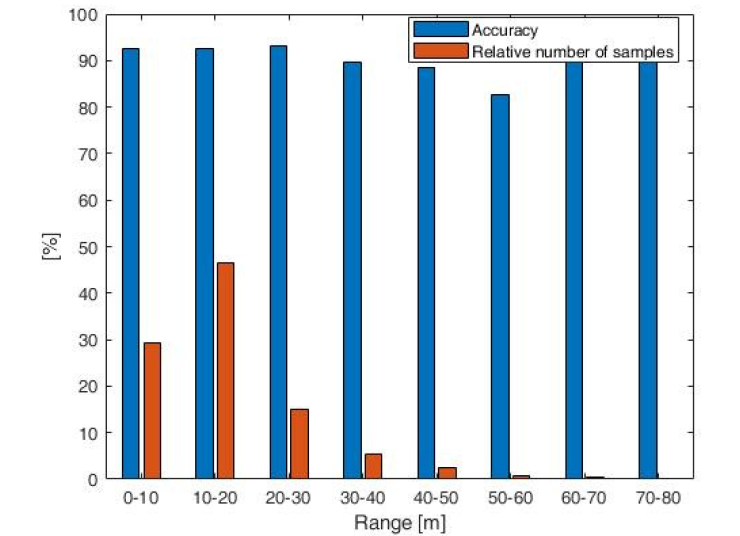

The second method does not require registering the frames: it can predict the object class from only a few LIDAR segments, or even a single one. Tests show that this method achieves higher accuracy than conventional methods, and that it can also be used in cases where the others cannot. Thus the detection range of the LIDAR sensor is increased [S4] [S5]. The method is based on a Fourier-based descriptor that captures how the segments change both in the vertical direction and over time. Classification is done by a neural network, and voting is done at the object level. Tests were made on the KITTI database [12], among others. Fig. 5 shows the influence of object-sensor distance on the method, while Fig. 6 illustrates how the method works on a point cloud sequence.
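As a rough illustration of the two ends of this pipeline, the sketch below computes a classical Fourier descriptor for a single 2D segment and combines per-segment labels by majority vote. It is not the descriptor of [S4] and [S5], which also accounts for change in the vertical direction and over time, and the neural network classifier is omitted entirely. The function names, the `n_coeffs` parameter, and the normalization by the first harmonic are assumptions made for the example.

```python
import cmath
from collections import Counter


def fourier_descriptor(points_2d, n_coeffs=4):
    """Translation-, rotation- and scale-invariant descriptor of an ordered 2D point sequence."""
    n = len(points_2d)
    if n < n_coeffs + 2:
        raise ValueError("segment has too few points for the requested coefficients")
    z = [complex(x, y) for x, y in points_2d]

    def dft(k):
        return sum(z[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))

    # Skip k = 0 (it only encodes position); magnitudes discard rotation.
    mags = [abs(dft(k)) for k in range(1, n_coeffs + 2)]
    scale = mags[0] or 1.0  # dividing by the first harmonic removes scale
    return [m / scale for m in mags[1:]]


def vote(segment_labels):
    """Object-level decision: the most frequent per-segment label."""
    return Counter(segment_labels).most_common(1)[0][0]
```

In a full system, each segment's descriptor would be passed to a trained classifier, and `vote` would then be applied to the labels of all segments belonging to one tracked object.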

Fig. 5 Accuracy of the method with respect to different distances. The distance practically has no effect on accuracy (other methods ignore objects further than 20-30 m away)

Fig. 6 The proposed method, color map: green - car, blue - cyclist. For visibility, the method is illustrated on a dense cloud (it provides results comparable to the state of the art in such cases, too), but its real advantage is that it also works in extreme cases

Expected impact and further research

I have proposed solutions to environment perception problems that previously limited the usability of LIDAR sensors. Using the proposed methods, we can also classify occluded, partially visible, and far-field LIDAR point clouds with poor vertical resolution. This research can significantly facilitate the wider spread of LIDAR sensors and their application to new tasks, thus promoting autonomous machine research. In the future, I plan to investigate other extreme cases in which the applicability of the sensor is limited for some reason, e.g. under bad weather conditions.

Publications, references, links

List of corresponding own publications

[S1] Z. Rozsa; T. Sziranyi, “Exploring in partial views: Prediction of 3D shapes from partial scans”, in: 12th IEEE International Conference on Control and Automation, ICCA 2016

[S2] Z. Rozsa; T. Sziranyi, “Object detection from partial view street data” In: IEEE 2016 International Workshop on Computational Intelligence for Multimedia Understanding (IWCIM)

[S3] Z. Rozsa; T. Sziranyi, “Obstacle Prediction for Automated Guided Vehicles Based on Point Clouds Measured by a Tilted LIDAR Sensor”, IEEE Transactions on Intelligent Transportation Systems, vol. 19, no. 8, pp. 2708–2720 (2018)

[S4] Z. Rozsa; T. Sziranyi, “Street object classification via LIDARs with only a single or a few layers”, In: Third IEEE International Conference on Image Processing, Applications and Systems (IPAS 2018), pp. 1–6 (2018)

[S5] Z. Rozsa; T. Sziranyi, “Object detection from a few LIDAR scanning planes”, IEEE Transactions on Intelligent Vehicles, in press (2019)

Table of links

Department of Material Handling and Logistics Systems

List of references

[1] D. Maturana and S. Scherer, “VoxNet: A 3D Convolutional Neural Network for Real-Time Object Recognition,” in IROS, 2015

[2] A. Borcs, B. Nagy, and C. Benedek, “Instant object detection in Lidar point clouds,” IEEE Geoscience and Remote Sensing Letters, vol. 14, no. 7, pp. 992–996, July 2017.

[3] M. De Deuge, A. Quadros, C. Hung, B. Douillard, “Unsupervised Feature Learning for Classification of Outdoor 3D Scans” In Australasian Conference on Robotics and Automation (ACRA), 2013.

[4] P. J. Besl and N. D. McKay, “A method for registration of 3-D shapes,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 14, no. 2, pp. 239–256, Feb 1992.

[5] P. Torr and A. Zisserman, “MLESAC: A new robust estimator with application to estimating image geometry,” Computer Vision and Image Understanding, vol. 78, no. 1, pp. 138–156, 2000.

[6] R. B. Rusu, “Semantic 3D object maps for everyday manipulation in human living environments,” Ph.D. dissertation, Computer Science Department, Technische Universitaet Muenchen, Germany, October 2009.

[7] D. Lague, N. Brodu, and J. Leroux, “Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (N-Z),” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 82, no. Supplement C, pp. 10–26, 2013

[8] M. L. Miller, H. S. Stone and I. J. Cox, “Optimizing Murty’s ranked assignment method,” IEEE Transactions on Aerospace and Electronic Systems, vol. 33, no. 3, pp. 851–862, July 1997

[9] A. Kovács and T. Szirányi, “Improved Harris feature point set for orientation-sensitive urban-area detection in aerial images,” IEEE Geosci. Remote Sens. Lett., vol. 10, no. 4, pp. 796–800, Jul. 2013.

[10] J. Cooley, P. Lewis, and P. Welch, “The finite Fourier transform,” IEEE Transactions on Audio and Electroacoustics, vol. 17, no. 2, pp. 77–85, Jun 1969.

[11] G. Csurka, C. R. Dance, L. Fan, J. Willamowski, and C. Bray, “Visual categorization with bags of keypoints,” in Proc. Workshop Statist. Learn. Comput. Vis. (ECCV), 2004, pp. 1–22.

[12] A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite”, Conference on Computer Vision and Pattern Recognition (CVPR), 2012