BMe Research Grant

Doctoral School of Informatics

Department of Control Engineering and Information Technology

Supervisor: Dr. SZEMENYEI Márton

Efficient Neural Network Pruning Using Model-based Reinforcement Learning

Introducing the research area

Deep neural networks have demonstrated outstanding performance in solving various computer vision tasks over the past decade. However, their application is often hindered by their high computational demands and large size, both of which stem from their huge number of parameters. This is a particularly significant issue for resource-constrained devices, such as mobile phones or embedded systems. As a result, many efficient AI solutions are accessible only to researchers and users who have expensive, high-performance graphics processors. It is therefore extremely important to develop a neural network pruning system that can be robustly applied across different architectures and domains.

Brief introduction of the research place

The Computer Vision and Machine Learning Research Group is located at the Department of Control Engineering and Information Technology at the Budapest University of Technology and Economics. Its mission is to create intelligent vision systems and image processing methods, as well as to research the applications of these solutions. The knowledge gained from research within the group is transferred to numerous application areas, notably intelligent robots, autonomous vehicles, geoinformatics, smart cities, and simulation-based learning systems. The research group also has several high-performance graphics processors, providing an excellent foundation for research related to neural networks.

History and context of the research

To address the challenges posed by the enormous number of parameters in neural networks, one widely used method is traditional neural network pruning [1]. In this approach, the weights to be removed from the trained, accurate model are identified using handcrafted rules, with the aim of preserving as much accuracy as possible. However, handcrafted rules restrict the search to a limited part of the solution space, thereby reducing the likelihood of finding the optimal solution. Moreover, such solutions are not general; each is tailored to a specific neural network architecture and dataset (domain). The problem can also be approached using reinforcement learning, where a neural network-based agent attempts to find the optimal weights to remove without human intervention. This approach is currently an open topic in the literature, but the main drawback of existing solutions is that the two most important environmental variables for the agent (the model's sparsity and accuracy degradation) are determined at run time by actually pruning the model and testing the reduced model, which greatly slows down the training process [2][3]. Thus, this approach does not yet offer a general, fast, and efficient way to prune neural network architectures either.

The research goals, open questions

My goal is to pave the way for the widespread applicability of neural network-based solutions in modern digital vision systems with lower computational capacity. I aim to develop a pruning system that, compared to existing solutions, can produce the pruned version of the input network in significantly less time and is generally applicable across different domains and neural network architectures. To the best of my knowledge, this has not yet been achieved in the literature.

Questions to be addressed during the research:

- Is it possible to significantly accelerate the process of neural network pruning using reinforcement learning compared to existing methods in the literature?

- Is it possible to achieve at least as good, or better pruning results with reinforcement learning as with traditional pruning rules for a widely used object detector?

- Is it possible to generalize the proposed reinforcement learning-based automated pruning system across datasets?

- Is it possible to generalize the proposed reinforcement learning-based automated pruning system across different neural network architectures?

Methods

To achieve the set goals and answer the questions, I am designing an innovative reinforcement learning-based automated pruning system for widely used YOLO-type object detectors [4]. Unlike existing solutions, during the training of the agent, instead of lengthy pruning and testing to determine environmental variables, a so-called state predictor neural network predicts them. Its task is to estimate the accuracy degradation and sparsity of the pruned model based on the number of deleted parameters and the reduction factor chosen for each layer. The state predictor network is trained on automatically generated data.
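How the training data for the state predictor could be generated automatically is sketched below. This is a minimal illustration, not the actual pipeline: it assumes that random per-layer pruning factors are sampled and that each sample is labelled by pruning and testing the trained detector once. The names `generate_spn_dataset`, `prune_and_evaluate`, and `toy_evaluate` are hypothetical, and the toy labelling function is made up so that the example runs on its own. For brevity, only the per-layer factors are used as predictor inputs here.

```python
import random
from typing import Callable, List, Tuple

# One training sample for the state predictor:
# (per-layer pruning factors, (accuracy_drop, sparsity)).
Sample = Tuple[List[float], Tuple[float, float]]


def generate_spn_dataset(
    num_layers: int,
    num_samples: int,
    prune_and_evaluate: Callable[[List[float]], Tuple[float, float]],
    max_factor: float = 0.9,
    seed: int = 0,
) -> List[Sample]:
    """Sample random pruning strategies and label them with measured outcomes.

    `prune_and_evaluate` is a hypothetical callback that prunes the trained
    detector with the given factors and returns (accuracy_drop, sparsity).
    It is slow, but it is only called here, not during agent training.
    """
    rng = random.Random(seed)
    dataset: List[Sample] = []
    for _ in range(num_samples):
        factors = [rng.uniform(0.0, max_factor) for _ in range(num_layers)]
        dataset.append((factors, prune_and_evaluate(factors)))
    return dataset


def toy_evaluate(factors: List[float]) -> Tuple[float, float]:
    """Stand-in for real pruning and testing, for illustration only."""
    sparsity = sum(factors) / len(factors)
    accuracy_drop = sparsity ** 2  # made-up relationship, not a measured one
    return accuracy_drop, sparsity


data = generate_spn_dataset(num_layers=4, num_samples=100, prune_and_evaluate=toy_evaluate)
```

The point of the design is that the expensive prune-and-test step is paid once, up front, while the agent later queries only the trained predictor.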

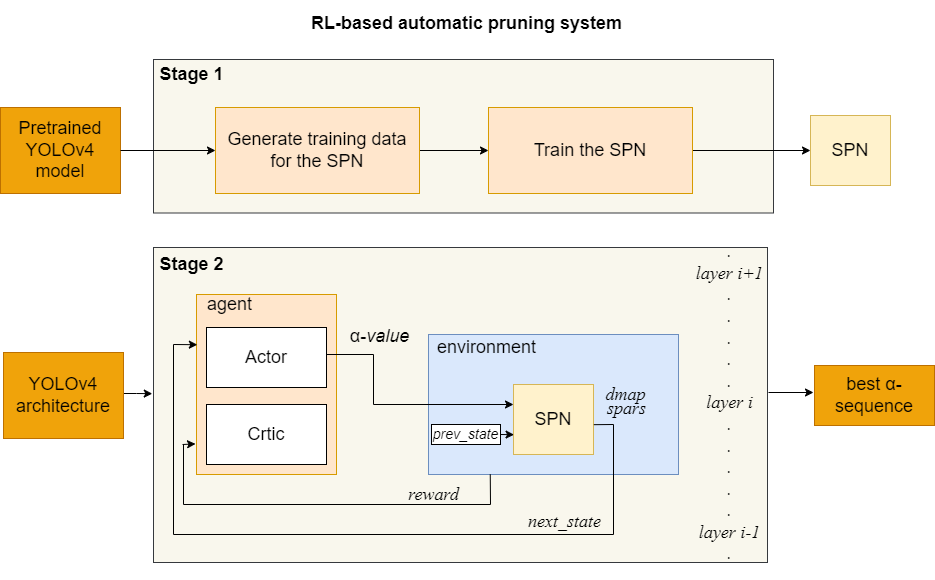

The operation of the proposed system is illustrated in Figure 1. The state predictor network (SPN) is trained using the trained detector network. After that, the architecture of the model to be pruned is passed to the reinforcement learning agent, which learns the optimal pruning strategy with the help of the state predictor network. At the end of the training, a pruning factor is obtained for each layer of the network, and these factors are used to prune the model in a single step.

Figure 1. Flowchart of the proposed reinforcement learning-based automated neural network pruning system.
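The workflow in Figure 1 can be illustrated with a small surrogate-driven search. Note that this sketch does not implement a reinforcement learning agent: a simple hill-climbing search stands in for it, only to show that candidate strategies are scored by a predictor and the model itself is never pruned inside the loop. The reward shape, the `penalty` weight, and `toy_predictor` are assumptions made for illustration.

```python
import random
from typing import Callable, List, Tuple

# A predictor maps per-layer pruning factors to (accuracy_drop, sparsity).
Predictor = Callable[[List[float]], Tuple[float, float]]


def reward(accuracy_drop: float, sparsity: float, penalty: float = 4.0) -> float:
    """Assumed reward: favour sparsity, penalise predicted accuracy loss."""
    return sparsity - penalty * accuracy_drop


def search_pruning_factors(
    predictor: Predictor,
    num_layers: int,
    steps: int = 2000,
    step_size: float = 0.05,
    max_factor: float = 0.9,
    seed: int = 0,
) -> List[float]:
    """Hill-climb over per-layer pruning factors using only the predictor."""
    rng = random.Random(seed)
    best = [0.0] * num_layers
    best_reward = reward(*predictor(best))
    for _ in range(steps):
        # Perturb the factor of one randomly chosen layer.
        candidate = list(best)
        layer = rng.randrange(num_layers)
        delta = rng.uniform(-step_size, step_size)
        candidate[layer] = min(max_factor, max(0.0, candidate[layer] + delta))
        # Score the candidate with the predictor; no pruning or testing here.
        candidate_reward = reward(*predictor(candidate))
        if candidate_reward > best_reward:
            best, best_reward = candidate, candidate_reward
    return best


def toy_predictor(factors: List[float]) -> Tuple[float, float]:
    """Made-up surrogate: early layers are assumed to be more sensitive."""
    n = len(factors)
    sparsity = sum(factors) / n
    accuracy_drop = sum((n - i) * f * f for i, f in enumerate(factors)) / (n * n)
    return accuracy_drop, sparsity


factors = search_pruning_factors(toy_predictor, num_layers=4)
```

The returned list plays the role of the per-layer pruning factors obtained at the end of training, which would then be applied to the real model in a single pruning step.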

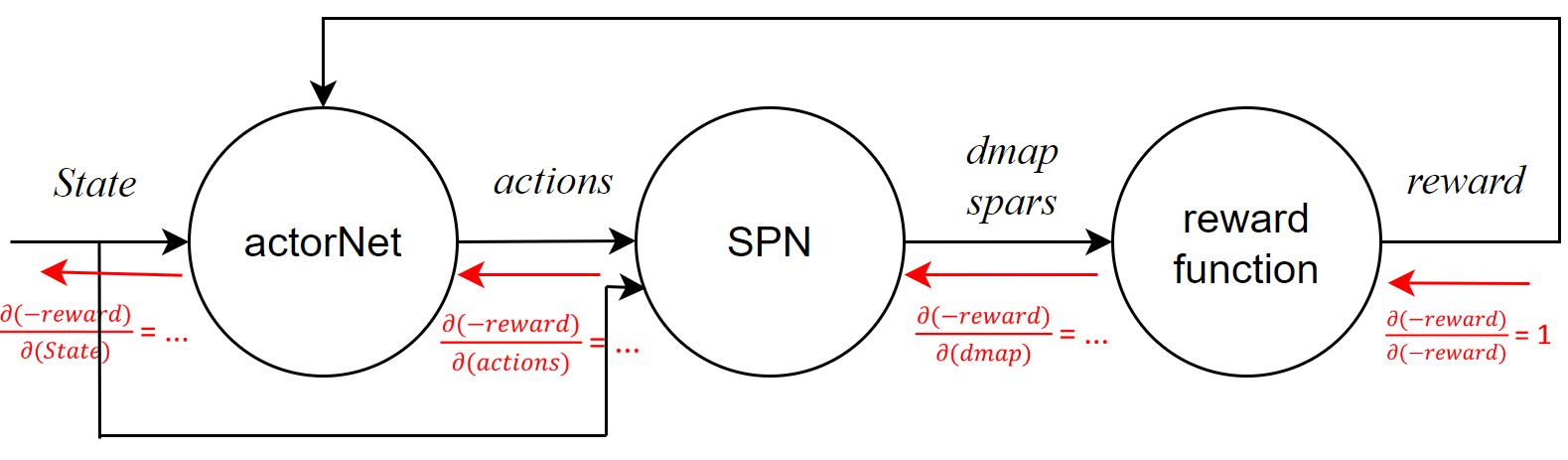

It is also important to highlight that the application of the state predictor network enables the use of model-based, multi-agent reinforcement learning in the pruning system, greatly speeding up the process of searching for ideal weights to remove (Figure 2).

Figure 2. Computational graph between the agent and the reward function.

Contrary to most pruning methods, the proposed system performs structured pruning, meaning it removes entire filters from the architecture instead of zeroing out individual weights [5], thus facilitating significant acceleration of the reduced model's execution even on graphics processing units (GPUs).
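The effect of removing whole filters can be made concrete with a parameter count. The sketch below assumes a plain sequential stack of convolutional layers, which is a simplification: real detectors such as YOLOv4 contain skip connections and concatenations that create further dependencies between layers. The layer sizes and pruning factors are invented for the example.

```python
from typing import List, Tuple

# A convolutional layer described as (in_channels, out_channels, kernel_size).
ConvSpec = Tuple[int, int, int]


def conv_params(spec: ConvSpec) -> int:
    """Weights plus one bias per filter."""
    in_ch, out_ch, k = spec
    return in_ch * out_ch * k * k + out_ch


def prune_filters(layers: List[ConvSpec], factors: List[float]) -> List[ConvSpec]:
    """Remove a fraction of filters from each layer of a sequential stack.

    Dropping filters from layer i also drops the matching input channels
    of layer i + 1, which is why structured pruning shrinks the dense
    tensors instead of leaving zeros behind.
    """
    pruned: List[ConvSpec] = []
    in_ch = layers[0][0]  # the network input (e.g. RGB) is never pruned
    for (_, out_ch, k), factor in zip(layers, factors):
        kept = max(1, out_ch - int(out_ch * factor))  # keep at least one filter
        pruned.append((in_ch, kept, k))
        in_ch = kept
    return pruned


def sparsity(original: List[ConvSpec], pruned: List[ConvSpec]) -> float:
    """Fraction of parameters removed."""
    before = sum(conv_params(s) for s in original)
    after = sum(conv_params(s) for s in pruned)
    return 1.0 - after / before


layers = [(3, 32, 3), (32, 64, 3), (64, 128, 3)]
pruned = prune_filters(layers, [0.25, 0.5, 0.5])
```

In this toy stack, the per-layer factors of 25%, 50%, and 50% remove about 72% of all parameters, because each layer loses both some of its own filters and the input channels fed by the pruned filters of the previous layer.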

To achieve generalization across datasets, I would fine-tune the state predictor network on the new dataset. For generalization across architectures, instead of manually mapping the dependencies between layers in the model architecture, I would employ a library that performs this task automatically within the system [6]. However, the latter requires that the state predictor network can handle dynamic (variable-length) inputs, since the number of prunable layers, which is one of the inputs of the state predictor network, varies between architectures. To achieve this, I would replace the existing state predictor network with a transformer architecture. Furthermore, I plan to train the agent online: the state predictor network will be continuously fine-tuned on the data generated during training. This is intended to make the state predictor network more robust and less sensitive to unseen patterns, resulting in more stable training.

Results

In my previous work, I implemented a reinforcement learning-based system that enables efficient pruning of a modern object detector while reducing the training time of the agent. Unlike existing solutions, which rely on a lengthy process to determine the environmental variables, the system employs a so-called state predictor neural network. Its task is to estimate the accuracy degradation and sparsity of the pruned model based on the number of deleted parameters and the reduction factor chosen for each layer. The state predictor network is trained on automatically generated data before the agent is trained.

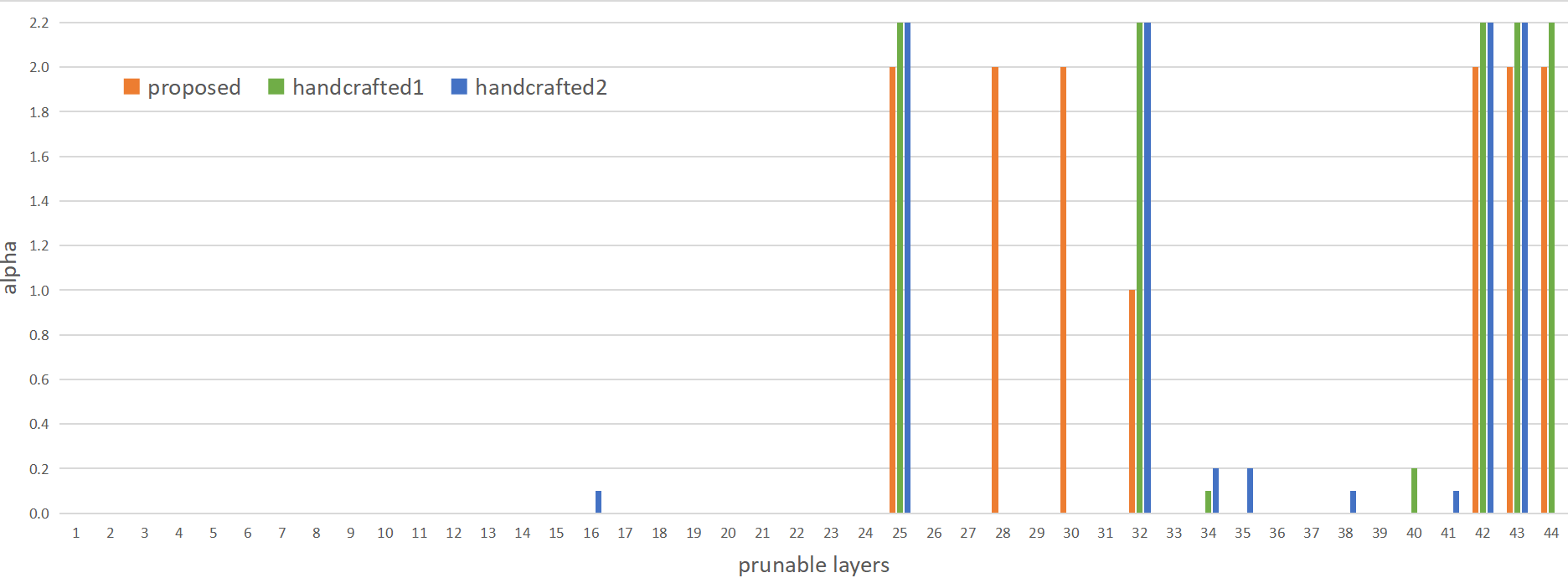

With the proposed pruning system, I successfully obtained a pruned version of the YOLOv4 object detector trained on the KITTI traffic dataset. This pruned version contains 61% fewer weights than the initial model, with only a 3.6% decrease in accuracy. This result greatly surpasses random pruning, which resulted in a 100% decrease in accuracy, as well as the traditional pruning rules I evaluated: the best-performing rule achieved a 3% decrease in accuracy while removing only 40.3% of the weights.

Figure 3. Comparison of different pruning rules. The x-axis represents the layers of the network, while the y-axis shows the chosen pruning factor. The traditional rules are custom-designed, relying on the common assumption that the larger a layer is, the larger the pruning factor applied to it should be. Handcrafted Rule 1 (green), Handcrafted Rule 2 (blue), strategy found with the proposed method (orange).
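The reported weight reductions can also be read as compression ratios, which makes the two strategies easier to compare. The short calculation below uses only the percentages stated above; the helper name `compression_ratio` is introduced here for illustration.

```python
def compression_ratio(fraction_removed: float) -> float:
    """Original model size divided by pruned model size."""
    return 1.0 / (1.0 - fraction_removed)


proposed = compression_ratio(0.61)     # 61% of the weights removed
best_rule = compression_ratio(0.403)   # 40.3% of the weights removed
print(f"proposed: {proposed:.2f}x, best handcrafted rule: {best_rule:.2f}x")
```

Under this reading, the proposed strategy shrinks the model to roughly 1/2.56 of its original size, compared with roughly 1/1.68 for the best handcrafted rule, at the cost of a 3.6% rather than a 3% decrease in accuracy.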

The proposed method yielded significant results in terms of the speed of the pruning system as well: the application of the state predictor network reduced the total development time by a factor of 12 compared to using the state-of-the-art reinforcement learning-based method for the task.

The results so far demonstrate that the research has yielded positive outcomes for the first two questions under consideration. I have successfully implemented an innovative automated pruning system, which was effectively applied to a new task (pruning of object detectors).

Expected impact and further research

Due to their reduced size, the neural network models obtained with the proposed pruning system become applicable in embedded systems, on resource-constrained devices, and in cases where processing speed is critical. The public release of this solution could also have a significant industrial impact, as a general pruning system can be easily integrated into proprietary systems. Additionally, the system makes reinforcement learning-based automatic pruning accessible to researchers who do not have expensive, high-performance graphics processors, as none of its parts requires large GPU memory.

At the current stage of the research, the next steps involve investigating the generalization capability both in terms of domains and architectures.

Publications, references, links

Publications

Blanka Bencsik and Márton Szemenyei: Multi-Domain Structural Pruning with Reinforcement Learning, Proceedings of the Workshop on the Advances in Information Technology 2024, Budapest, BME Irányítástechnika és Informatika Tanszék, pp. 133–141.

Bencsik, B.; Reményi, I.; Szemenyei, M.; Botzheim, J. Designing an Embedded Feature Selection Algorithm for a Drowsiness Detector Model Based on Electroencephalogram Data. Sensors 2023, 23, 1874. https://www.mdpi.com/1424-8220/23/4/1874

B. Bencsik and M. Szemenyei, "Efficient Neural Network Pruning Using Model-Based Reinforcement Learning", 2022 International Symposium on Measurement and Control in Robotics (ISMCR), Houston, TX, USA, 2022, pp. 1–8, doi: 10.1109/ISMCR56534.2022.9950598 https://ieeexplore.ieee.org/document/9950598

Blanka Bencsik and Márton Szemenyei: Efficient Neural Network Pruning Using Model-Based Reinforcement Learning, Proceedings of the Workshop on the Advances in Information Technology 2022, Budapest, Hungary: OSZK (2022), pp. 15–22.

References

[1] Song Han, Jeff Pool, John Tran, and William J. Dally. Learning both weights and connections for efficient neural networks. In Proceedings of the 28th International Conference on Neural Information Processing Systems - Volume 1, NIPS’15, pages 1135–1143, Cambridge, MA, USA, 2015. MIT Press.

[2] Yihui He, Ji Lin, Zhijian Liu, Hanrui Wang, Li-Jia Li, and Song Han. AMC: AutoML for Model Compression and Acceleration on Mobile Devices. In European Conference on Computer Vision, 2018.

[3] Manas Gupta, Siddharth Aravindan, Aleksandra Kalisz, Vijay Ramaseshan Chandrasekhar, and Lin Jie. Learning to prune deep neural networks via reinforcement learning. ArXiv, abs/2007.04756, 2020.

[4] Alexey Bochkovskiy, Chien-Yao Wang, and Hong-Yuan Mark Liao. YOLOv4: Optimal speed and accuracy of object detection. ArXiv, abs/2004.10934, 2020.

[5] Hao Li, Asim Kadav, Igor Durdanovic, Hanan Samet, and Hans Peter Graf. Pruning filters for efficient convnets. ArXiv, abs/1608.08710, 2016.

[6] Gongfan Fang, Xinyin Ma, Mingli Song, Michael Bi Mi, and Xinchao Wang. DepGraph: Towards any structural pruning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 16091–16101, 2023.