BMe Research Grant

Doctoral School of Psychology (Cognitive Science)

Department of Cognitive Science

Supervisor: Dr. TÓTH Brigitta

Learning and brain plasticity as a result of sound localization training

Introducing the research area

Can spatial hearing be improved with training in virtual reality, and if so, which cortical processes accompany the improvement? These are important research questions because determining the location of a sound source – termed sound localization – is an essential skill in daily life, yet hearing-impaired people often struggle with it. In our research, we use the methods of psychology and neuroscience to explore how spatial hearing can be trained.

Brief introduction of the research place

We investigate perceptual learning and the accompanying brain plasticity in an electrophysiological laboratory. The room is soundproof and shielded from external electrical signals, so the brain’s electrical activity can be recorded without interference. For this project, we need only a VR headset, loudspeakers, and an electroencephalography (EEG) system (Figure 1).

Figure 1. EEG and VR at the research place.

History and context of the research

Spatial hearing is a fundamental ability in everyday life. It helps us locate sound sources – such as an approaching vehicle on the street – and separate different streams of sound – such as the voice of our conversation partner from background noise (Bregman, 1994). Impaired spatial hearing reduces the quality of life of hearing-impaired people. To improve their hearing, they can use cochlear implants, which are electronic devices with electrodes implanted into the inner ear. Cochlear implants can restore hearing thresholds to near-healthy levels, but when it comes to spatial hearing, they cannot match the accuracy, efficiency, and flexibility of biological systems (Van Opstal, 2016). The solution to this problem lies not only in the computational development of implants but also in taking advantage of the users’ brain plasticity: with adequate training, people can adapt to novel listening situations (Firszt et al., 2015; Shinn-Cunningham et al., 1998; Valzolgher et al., 2020).

The research goals, open questions

This research project aims to help cochlear implant users improve their sound localization skills in the comfort of their homes. To this end, we are assessing whether a gamified sound localization training program performed in virtual reality can improve spatial hearing, and if so, whether this effect generalizes to sound localization in real space. Later, we will examine how sound localization training affects cortical mechanisms.

Methods

In the current phase of the project, we ask normal-hearing young adults to come to our lab and play sound localization training games in virtual reality. Before and after the training, we measure our participants’ spatial hearing skills with various tests.

The VR sound localization training that we employ is the Both Ears Training Package (BEARS), which was developed by experts at the National Institute for Health and Care Research in Great Britain. We are testing the program with a young adult sample as part of an international cooperation. In the training, participants hear sounds from various virtual directions and have to indicate the location of each sound source. They collect points and other motivating rewards in the virtual environment (Figure 2). A video listed in the table of links below illustrates the user experience of the game.

Figure 2. The user interface of the VR spatial hearing training.

We evaluate sound localization skills before and after training, both in virtual reality and in the free field. For VR testing, we employ a different program than for the training: here, participants have to indicate the location of white noise bursts without any visual cues about the sound source and with no feedback about their accuracy. In the free field test, we use three loudspeakers (Figure 3): participants listen to short white noise bursts and speech stimuli and decide which loudspeaker played each sound.

Figure 3. Loudspeaker setup in the laboratory.

Our ultimate goal is to develop a training program for cochlear implant users, but as a first step, we are testing the program with normal-hearing participants. Cochlear implants are often used in only one ear, creating a special auditory experience: hearing undergoes an acute change in one of the ears, and the user has to adapt to this situation. We model this auditory experience in our normal-hearing participants with a simple method: we insert an earplug in their right ear, which they wear throughout training and testing.

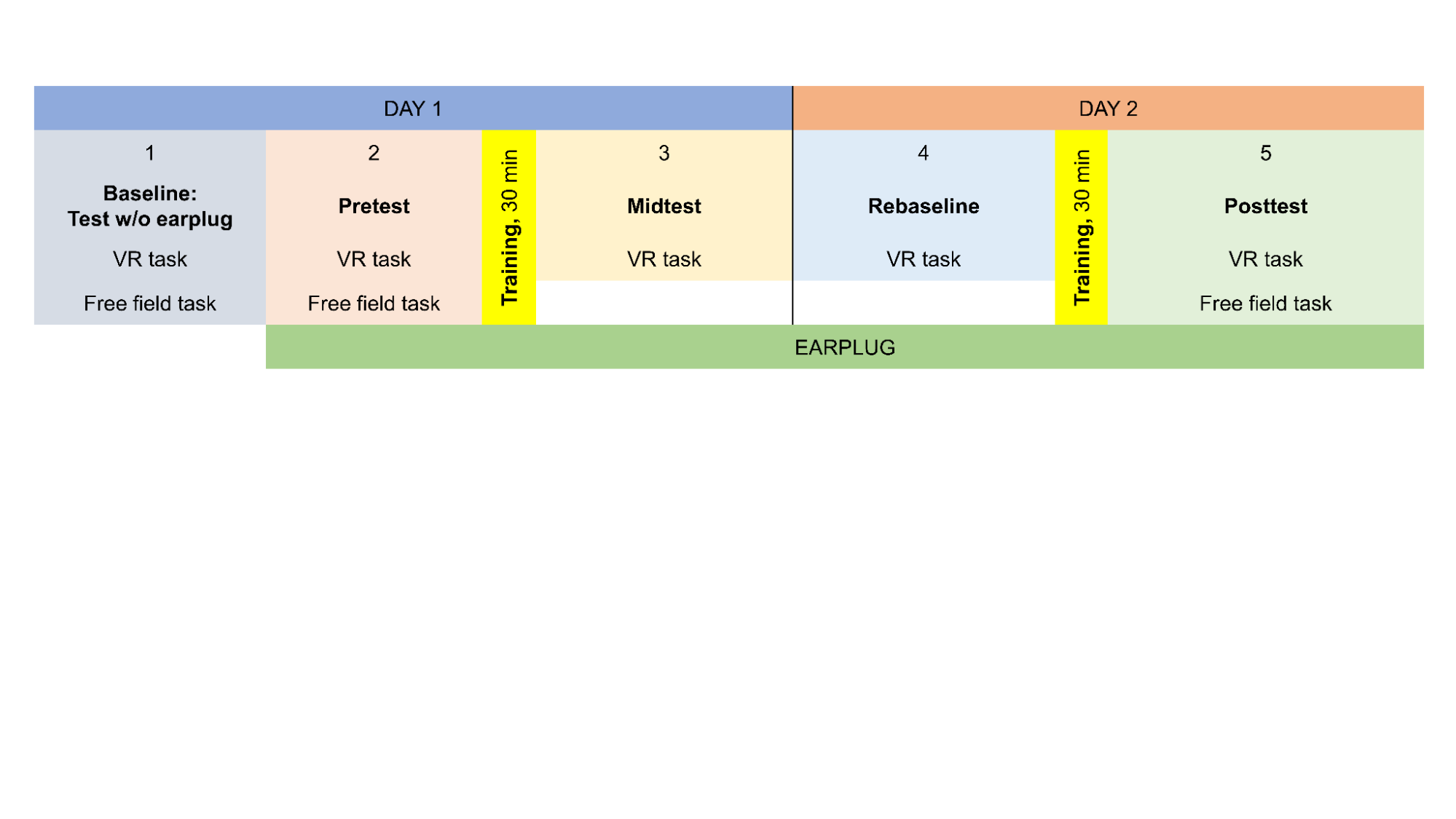

Each participant visits our lab on two consecutive days (Figure 4). On the first day, we ask them to complete the pretests and 30 minutes of VR training, and on the second day, 30 more minutes of VR training and the posttests. To get a detailed picture of how performance develops as a result of training, we conduct additional tests throughout the experiment. We start the first day’s tests without an earplug as a baseline, then continue with the earplugged pretests. Next, during the 30 minutes of VR training, participants play 3 different games, each for 10 minutes. The VR localization test is then repeated, which can reveal any immediate, short-term change resulting from the first half of the training.

Figure 4. Experimental design.

The second day begins with yet another iteration of the VR localization test, as this can show any changes in sound localization occurring after sleep, without additional training. Participants then complete 30 more minutes of training and finish with the two posttests. An earplug is used for every task on the second day.

Results

The results so far show that VR sound localization training can improve spatial hearing abilities, although whether this improvement generalizes to free field listening remains unclear.

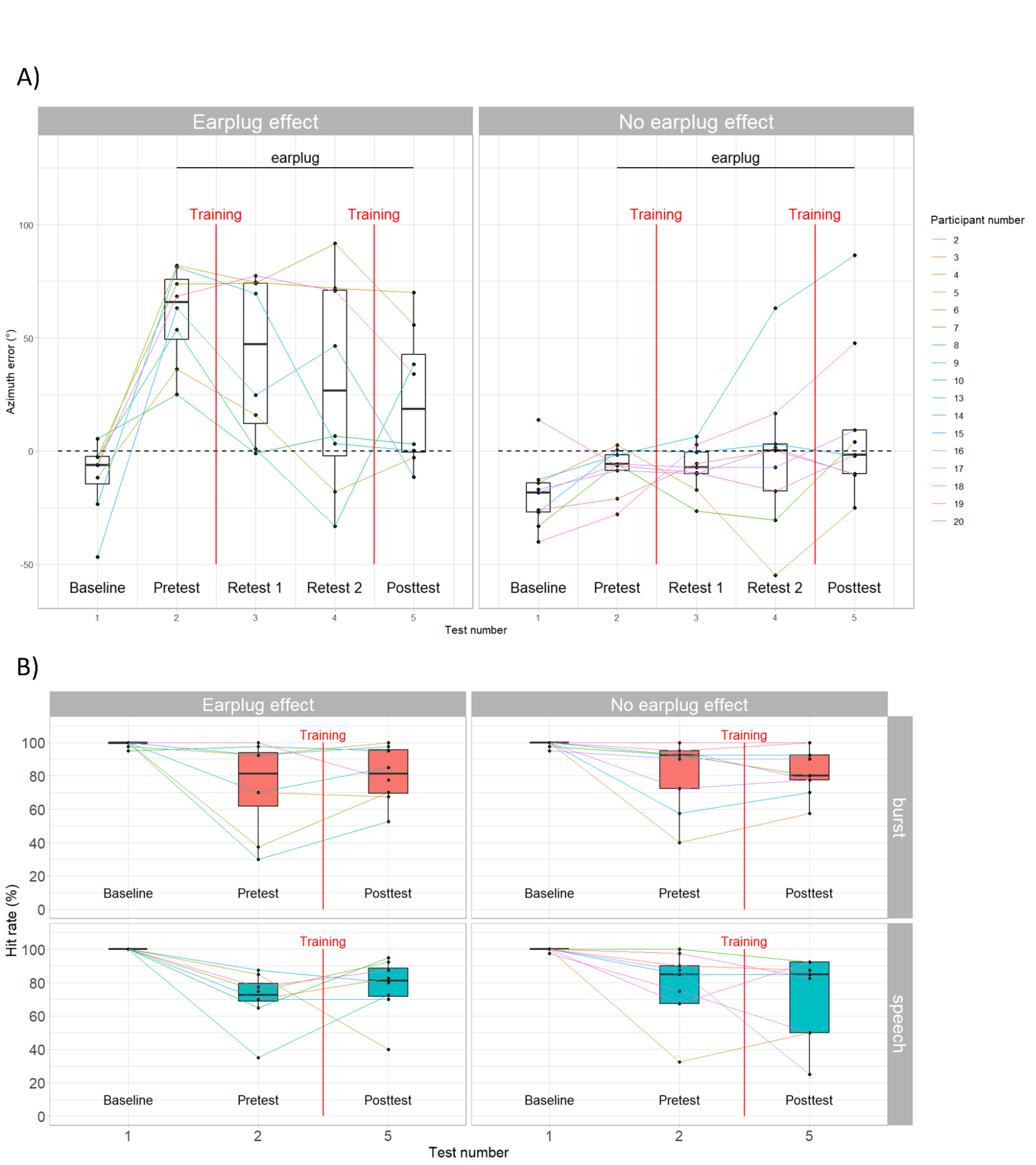

Modeling a hearing impairment with an earplug was not effective for all of our participants: some of them can localize sounds well even while wearing an earplug. Therefore, we split the participants into two groups: those whose performance decreased as a result of the earplug (that is, the hearing loss modeling was effective), and those whose performance did not.
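The grouping described above can be sketched in a few lines of Python. This is only an illustration: the text does not state the criterion used to decide whether the earplug was effective, so the threshold `min_increase_deg`, the function name, and the per-participant error scores below are all hypothetical.

```python
def split_by_earplug_effect(baseline_error, earplug_error, min_increase_deg=5.0):
    """Split participants by how much the earplug worsened their localization.

    baseline_error / earplug_error map a participant ID to a mean localization
    error in degrees, measured without and with the earplug.
    min_increase_deg is an ASSUMED cut-off, not the criterion used in the study.
    """
    affected, unaffected = [], []
    for pid in sorted(baseline_error):
        increase = earplug_error[pid] - baseline_error[pid]
        if increase >= min_increase_deg:
            affected.append(pid)      # hearing-loss modeling was effective
        else:
            unaffected.append(pid)    # participant localizes well despite the earplug
    return affected, unaffected


# Invented numbers, for illustration only (not study data).
baseline = {"p01": 8.0, "p02": 10.0, "p03": 7.5}
earplugged = {"p01": 25.0, "p02": 11.0, "p03": 30.0}
affected, unaffected = split_by_earplug_effect(baseline, earplugged)
```

Any such cut-off is a design choice; a stricter or more lenient threshold would move participants between the two groups.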

The VR test measures the angle between the actual and perceived locations of the sound source, that is, how far the listener’s guess is from the sound’s true direction. As a result of the training, this angular error approaches zero in the group where the hearing-impairment modeling was successful; that is, sound localization improves as the training program progresses (Figure 5A). There are large individual differences, however: participants respond to the training differently.
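As a minimal sketch of such an angular error measure, the Python snippet below computes the angle between a target direction and a response direction. It assumes that each direction is given as an azimuth and an elevation in degrees; the text does not describe how the test program represents directions, so this coordinate convention and all function names are illustrative.

```python
import math


def direction_vector(azimuth_deg, elevation_deg):
    """Convert azimuth/elevation in degrees to a unit vector (x, y, z)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))


def angular_error_deg(target, response):
    """Angle in degrees between two (azimuth, elevation) directions."""
    a = direction_vector(*target)
    b = direction_vector(*response)
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding slightly outside [-1, 1]
    return math.degrees(math.acos(dot))


def mean_angular_error(trials):
    """Average angular error over a list of (target, response) pairs."""
    errors = [angular_error_deg(target, response) for target, response in trials]
    return sum(errors) / len(errors)
```

A value of 0 means the response pointed exactly at the sound source, and larger values mean a larger localization error.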

The test conducted in the free field with loudspeakers measures the hit rate, that is, the proportion of correctly localized sound sources out of three choices. In this task, performance did not improve much in either group, regardless of whether white noise or speech had to be localized (Figure 5B). This could mean that the effects of VR training do not generalize to listening in the free field – but it could also mean that the test we use does not measure these changes reliably because it does not resemble natural listening situations closely enough. Before we draw any conclusions based on these results, we may have to reconsider this test.
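The hit rate itself is simple arithmetic, sketched below in Python. The loudspeaker labels and the example trials are invented for illustration; the only facts taken from the text are that there are three response choices, so pure guessing with equal probability would give a hit rate of about one third.

```python
CHANCE_LEVEL = 1 / 3  # three loudspeakers, assuming equally likely guesses


def hit_rate(played, chosen):
    """Proportion of trials in which the chosen loudspeaker matches the one that played."""
    if not played or len(played) != len(chosen):
        raise ValueError("played and chosen must be non-empty and of equal length")
    hits = sum(p == c for p, c in zip(played, chosen))
    return hits / len(played)


# Invented trials, for illustration only (not study data).
played = ["left", "center", "right", "left"]
chosen = ["left", "right", "right", "left"]
rate = hit_rate(played, chosen)  # 3 of 4 correct -> 0.75
```

Comparing a participant's hit rate before and after training against `CHANCE_LEVEL` gives a rough sense of whether performance is above guessing, although with only three choices the measure is coarse.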

Figure 5. Results so far.

Expected impact and further research

The research topic offers several further opportunities. First, we have to clarify whether the improvements in virtual sound localization generalize to real environments. After this, we plan to use EEG to investigate the cortical changes that occur as a result of sound localization training, in order to uncover the neural mechanisms behind spatial hearing plasticity. We also plan to examine the effect of the training on more complex cognitive processes, specifically on speech processing in noise and in multi-talker situations. This ability relies on separating various sound sources, so we hypothesize that sound localization training supports efficient speech comprehension in the noisy environments we encounter in our daily lives. Finally, our long-term goal is to give cochlear implant users the opportunity to develop their spatial hearing abilities with the training.

Publications, references, links

List of own related publications.

Related journal articles:

Kovács, P., Szalárdy, O., Winkler, I., & Tóth, B. (2023). Two effects of perceived speaker similarity in resolving the cocktail party situation–ERPs and functional connectivity. Biological Psychology, 182, 108651. https://doi.org/10.1016/j.biopsycho.2023.108651

Kovács, P., Tóth, B., Honbolygó, F., Szalárdy, O., Kohári, A., Mády, K., Magyari, L., & Winkler, I. (2023). Speech prosody supports speaker selection and auditory stream segregation in a multi-talker situation. Brain Research, 148246. https://doi.org/10.1016/j.brainres.2023.148246

Selected related conference presentations:

Kovács, P., Tóth, B., Szalárdy, O., & Winkler, I. (2023, January 13). Speech processing in multi-talker situations: The role of speaker similarity [Poster]. Speech in Noise Workshop, Split, Croatia.

Kovács, P., Tóth, B., Szalárdy, O., & Winkler, I. (2023, January 31). Speech processing in multi-talker situations: The role of speaker similarity [Poster and elevator speech]. HunDoC, Budapest, Hungary.

Kovács, P., Tóth, B., Szalárdy, O., Honbolygó, F., Kohári, A., Mády, K., Magyari, L., & Winkler, I. (2022, January 20–21). The role of speech prosody in stream segregation and selective attention in a multi-talker situation [Poster]. Speech in Noise Workshop. https://2022.speech-in-noise.eu/?p=home

Kovács, P., Tóth, B., Szalárdy, O., Honbolygó, F., & Winkler, I. (2021, November 18–19). How prosody helps auditory stream segregation and selective attention in a multi-talker situation [Presentation]. Felelős nyelvészet – Alkalmazott Nyelvészeti Konferencia. http://resling.elte.hu/

Table of links.

● The VR training program (BEARS): https://www.guysandstthomasbrc.nihr.ac.uk/microsites/bears/

● Video about the training environment: https://youtu.be/iV7HnEmCfV8?si=GcDiCwtww5E2Zsh5

● Website of the Sound and Speech Perception Research Group: https://www.ttk.hu/kpi/hang-es-beszedeszlelesi-kutatocsoport/

List of references.

Bregman, A. S. (1994). Auditory scene analysis: The perceptual organization of sound. MIT Press.

Firszt, J. B., Reeder, R. M., Dwyer, N. Y., Burton, H., & Holden, L. K. (2015). Localization training results in individuals with unilateral severe to profound hearing loss. Hearing Research, 319, 48–55.

Shinn-Cunningham, B. G., Durlach, N. I., & Held, R. M. (1998). Adapting to supernormal auditory localization cues. I. Bias and resolution. The Journal of the Acoustical Society of America, 103(6), 3656–3666.

Valzolgher, C., Campus, C., Rabini, G., Gori, M., & Pavani, F. (2020). Updating spatial hearing abilities through multisensory and motor cues. Cognition, 204, 104409.

Van Opstal, J. (2016). The auditory system and human sound-localization behavior. Academic Press.